My ultimate goal is to redirect all internet traffic on my home network through my little Arch Linux box using Squid and DansGuardian.

I followed this gentleman's tutorial. It had its hurdles, but I got everything installed successfully. I haven't done the last step of configuring my router yet, because I first wanted to take a PC (running Windows), set Internet Explorer to use the proxy, and make sure it works. Well, I can't seem to get it to work. Every page just comes up as "cannot be displayed".

I'm hoping it's just some simple setting that I need to tweak. The IP range on my network is 192.168.0.200 - 250, which I've reflected in my squid.conf. The IP of my Arch Linux (Pogoplug) box is 192.168.0.222. Below are my squid.conf and dansguardian.conf. Any help would be greatly appreciated!

Oh, for testing, I've just been going into IE: Tools, 'Connections' tab, then LAN Settings, checking the proxy box, and putting in the IP of my Pogoplug and port 8080. I've tried ports 80 and 3128 as well, and I still can't load pages when I have the proxy set.
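
A quick way to see which of these ports actually accepts connections, independent of IE, is a small TCP probe run from the Windows PC. This is only a sketch: the helper name `port_open` is made up for illustration, and a successful connect says only that something is listening on that port, not that filtering works.

```python
import socket


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and performs the TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # refused, timed out, unreachable, etc.
        return False
```

For example, `[p for p in (8080, 80, 3128, 8888) if port_open("192.168.0.222", p)]` would list the listening ports on the Pogoplug; 8888 is included here because it is the `filterport` value in the dansguardian.conf posted below.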

Do I need to change the proxyip in my dansguardian.conf?

Thanks again.

dansguardian.conf:

$this->bbcode_second_pass_code('', '# DansGuardian config file for version 2.10.1.1

# **NOTE** as of version 2.7.5 most of the list files are now in dansguardianf1.conf

# Web Access Denied Reporting (does not affect logging)

#

# -1 = log, but do not block - Stealth mode

# 0 = just say 'Access Denied'

# 1 = report why but not what denied phrase

# 2 = report fully

# 3 = use HTML template file (accessdeniedaddress ignored) - recommended

#

reportinglevel = 3

# Language dir where languages are stored for internationalisation.

# The HTML template within this dir is only used when reportinglevel

# is set to 3. When used, DansGuardian will display the HTML file instead of

# using the perl cgi script. This option is faster, cleaner

# and easier to customise the access denied page.

# The language file is used no matter what setting however.

#

languagedir = '/usr/share/dansguardian/languages'

# language to use from languagedir.

language = 'ukenglish'

# Logging Settings

#

# 0 = none 1 = just denied 2 = all text based 3 = all requests

loglevel = 2

# Log Exception Hits

# Log if an exception (user, ip, URL, phrase) is matched and so

# the page gets let through. Can be useful for diagnosing

# why a site gets through the filter.

# 0 = never log exceptions

# 1 = log exceptions, but do not explicitly mark them as such

# 2 = always log & mark exceptions (default)

logexceptionhits = 2

# Log File Format

# 1 = DansGuardian format (space delimited)

# 2 = CSV-style format

# 3 = Squid Log File Format

# 4 = Tab delimited

logfileformat = 1

# truncate large items in log lines

#maxlogitemlength = 400

# anonymize logs (blank out usernames & IPs)

#anonymizelogs = on

# Syslog logging

#

# Use syslog for access logging instead of logging to the file

# at the defined or built-in "loglocation"

#syslog = on

# Log file location

#

# Defines the log directory and filename.

#loglocation = '/var/log/dansguardian/access.log'

# Statistics log file location

#

# Defines the stat file directory and filename.

# Only used in conjunction with maxips > 0

# Once every 3 minutes, the current number of IPs in the cache, and the most

# that have been in the cache since the daemon was started, are written to this

# file. IPs persist in the cache for 7 days.

#statlocation = '/var/log/dansguardian/stats'

# Network Settings

#

# the IP that DansGuardian listens on. If left blank DansGuardian will

# listen on all IPs. That would include all NICs, loopback, modem, etc.

# Normally you would have your firewall protecting this, but if you want

# you can limit it to a certain IP. To bind to multiple interfaces,

# specify each IP on an individual filterip line.

filterip =

# the port that DansGuardian listens to.

filterport = 8888

# the ip of the proxy (default is the loopback - i.e. this server)

proxyip = 127.0.0.1

# the port DansGuardian connects to proxy on

proxyport = 3128

# Whether to retrieve the original destination IP in transparent proxy

# setups and check it against the domain pulled from the HTTP headers.

#

# Be aware that when visiting sites which use a certain type of round-robin

# DNS for load balancing, DG may mark requests as invalid unless DG gets

# exactly the same answers to its DNS requests as clients. The chances of

# this happening can be increased if all clients and servers on the same LAN

# make use of a local, caching DNS server instead of using upstream DNS

# directly.

#

# See http://www.kb.cert.org/vuls/id/435052

# on (default) | off

#!! Not compiled !! originalip = on

# accessdeniedaddress is the address of your web server to which the cgi

# dansguardian reporting script was copied. Only used in reporting levels 1 and 2.

#

# This webserver must be either:

# 1. Non-proxied. Either a machine on the local network, or listed as an exception

# in your browser's proxy configuration.

# 2. Added to the exceptionsitelist. Option 1 is preferable; this option is

# only for users using both transparent proxying and a non-local server

# to host this script.

#

# Individual filter groups can override this setting in their own configuration.

#

accessdeniedaddress = 'http://YOURSERVER.YOURDOMAIN/cgi-bin/dansguardian.pl'

# Non standard delimiter (only used with accessdeniedaddress)

# To help preserve the full banned URL, including parameters, the variables

# passed into the access denied CGI are separated using non-standard

# delimiters. This can be useful to ensure correct operation of the filter

# bypass modes. Parameters are split using "::" in place of "&", and "==" in

# place of "=".

# Default is enabled, but to go back to the standard mode, disable it.

nonstandarddelimiter = on

# Banned image replacement

# Images that are banned due to domain/url/etc reasons including those

# in the adverts blacklists can be replaced by an image. This will,

# for example, hide images from advert sites and remove broken image

# icons from banned domains.

# on (default) | off

usecustombannedimage = on

custombannedimagefile = '/usr/share/dansguardian/transparent1x1.gif'

# Filter groups options

# filtergroups sets the number of filter groups. A filter group is a set of content

# filtering options you can apply to a group of users. The value must be 1 or more.

# DansGuardian will automatically look for dansguardianfN.conf where N is the filter

# group. To assign users to groups use the filtergroupslist option. All users default

# to filter group 1. You must have some sort of authentication to be able to map users

# to a group. The more filter groups the more copies of the lists will be in RAM so

# use as few as possible.

filtergroups = 1

filtergroupslist = '/etc/dansguardian/lists/filtergroupslist'

# Authentication files location

bannediplist = '/etc/dansguardian/lists/bannediplist'

exceptioniplist = '/etc/dansguardian/lists/exceptioniplist'

# Show weighted phrases found

# If enabled then the phrases found that made up the total which excedes

# the naughtyness limit will be logged and, if the reporting level is

# high enough, reported. on | off

showweightedfound = on

# Weighted phrase mode

# There are 3 possible modes of operation:

# 0 = off = do not use the weighted phrase feature.

# 1 = on, normal = normal weighted phrase operation.

# 2 = on, singular = each weighted phrase found only counts once on a page.

#

weightedphrasemode = 2

# Positive (clean) result caching for URLs

# Caches good pages so they don't need to be scanned again.

# It also works with AV plugins.

# 0 = off (recommended for ISPs with users with disimilar browsing)

# 1000 = recommended for most users

# 5000 = suggested max upper limit

# If you're using an AV plugin then use at least 5000.

urlcachenumber = 1000

#

# Age before they are stale and should be ignored in seconds

# 0 = never

# 900 = recommended = 15 mins

urlcacheage = 900

# Clean cache for content (AV) scan results

# By default, to save CPU, files scanned and found to be

# clean are inserted into the clean cache and NOT scanned

# again for a while. If you don't like this then choose

# to disable it.

# (on|off) default = on.

scancleancache = on

# Smart, Raw and Meta/Title phrase content filtering options

# Smart is where the multiple spaces and HTML are removed before phrase filtering

# Raw is where the raw HTML including meta tags are phrase filtered

# Meta/Title is where only meta and title tags are phrase filtered (v. quick)

# CPU usage can be effectively halved by using setting 0 or 1 compared to 2

# 0 = raw only

# 1 = smart only

# 2 = both of the above (default)

# 3 = meta/title

phrasefiltermode = 2

# Lower casing options

# When a document is scanned the uppercase letters are converted to lower case

# in order to compare them with the phrases. However this can break Big5 and

# other 16-bit texts. If needed preserve the case. As of version 2.7.0 accented

# characters are supported.

# 0 = force lower case (default)

# 1 = do not change case

# 2 = scan first in lower case, then in original case

preservecase = 0

# Note:

# If phrasefiltermode and preserve case are both 2, this equates to 4 phrase

# filtering passes. If you have a large enough userbase for this to be a

# worry, and need to filter pages in exotic character encodings, it may be

# better to run two instances on separate servers: one with preservecase 1

# (and possibly forcequicksearch 1) and non ASCII/UTF-8 phrase lists, and one

# with preservecase 0 and ASCII/UTF-8 lists.

# Hex decoding options

# When a document is scanned it can optionally convert %XX to chars.

# If you find documents are getting past the phrase filtering due to encoding

# then enable. However this can break Big5 and other 16-bit texts.

# off = disabled (default)

# on = enabled

hexdecodecontent = off

# Force Quick Search rather than DFA search algorithm

# The current DFA implementation is not totally 16-bit character compatible

# but is used by default as it handles large phrase lists much faster.

# If you wish to use a large number of 16-bit character phrases then

# enable this option.

# off (default) | on (Big5 compatible)

forcequicksearch = off

# Reverse lookups for banned site and URLs.

# If set to on, DansGuardian will look up the forward DNS for an IP URL

# address and search for both in the banned site and URL lists. This would

# prevent a user from simply entering the IP for a banned address.

# It will reduce searching speed somewhat so unless you have a local caching

# DNS server, leave it off and use the Blanket IP Block option in the

# bannedsitelist file instead.

reverseaddresslookups = off

# Reverse lookups for banned and exception IP lists.

# If set to on, DansGuardian will look up the forward DNS for the IP

# of the connecting computer. This means you can put in hostnames in

# the exceptioniplist and bannediplist.

# If a client computer is matched against an IP given in the lists, then the

# IP will be recorded in any log entries; if forward DNS is successful and a

# match occurs against a hostname, the hostname will be logged instead.

# It will reduce searching speed somewhat so unless you have a local DNS server,

# leave it off.

reverseclientiplookups = off

# Perform reverse lookups on client IPs for successful requests.

# If set to on, DansGuardian will look up the forward DNS for the IP

# of the connecting computer, and log host names (where available) rather than

# IPs against requests.

# This is not dependent on reverseclientiplookups being enabled; however, if it

# is, enabling this option does not incur any additional forward DNS requests.

logclienthostnames = off

# Build bannedsitelist and bannedurllist cache files.

# This will compare the date stamp of the list file with the date stamp of

# the cache file and will recreate as needed.

# If a bsl or bul .processed file exists, then that will be used instead.

# It will increase process start speed by 300%. On slow computers this will

# be significant. Fast computers do not need this option. on | off

createlistcachefiles = on

# POST protection (web upload and forms)

# does not block forms without any file upload, i.e. this is just for

# blocking or limiting uploads

# measured in kibibytes after MIME encoding and header bumph

# use 0 for a complete block

# use higher (e.g. 512 = 512Kbytes) for limiting

# use -1 for no blocking

#maxuploadsize = 512

#maxuploadsize = 0

maxuploadsize = -1

# Max content filter size

# Sometimes web servers label binary files as text which can be very

# large which causes a huge drain on memory and cpu resources.

# To counter this, you can limit the size of the document to be

# filtered and get it to just pass it straight through.

# This setting also applies to content regular expression modification.

# The value must not be higher than maxcontentramcachescansize

# The size is in Kibibytes - eg 2048 = 2Mb

# use 0 to set it to maxcontentramcachescansize

maxcontentfiltersize = 256

# Max content ram cache scan size

# This is only used if you use a content scanner plugin such as AV

# This is the max size of file that DG will download and cache

# in RAM. After this limit is reached it will cache to disk

# This value must be less than or equal to maxcontentfilecachescansize.

# The size is in Kibibytes - eg 10240 = 10Mb

# use 0 to set it to maxcontentfilecachescansize

# This option may be ignored by the configured download manager.

maxcontentramcachescansize = 2000

# Max content file cache scan size

# This is only used if you use a content scanner plugin such as AV

# This is the max size file that DG will download

# so that it can be scanned or virus checked.

# This value must be greater or equal to maxcontentramcachescansize.

# The size is in Kibibytes - eg 10240 = 10Mb

maxcontentfilecachescansize = 20000

# File cache dir

# Where DG will download files to be scanned if too large for the

# RAM cache.

filecachedir = '/tmp'

# Delete file cache after user completes download

# When a file gets save to temp it stays there until it is deleted.

# You can choose to have the file deleted when the user makes a sucessful

# download. This will mean if they click on the link to download from

# the temp store a second time it will give a 404 error.

# You should configure something to delete old files in temp to stop it filling up.

# on|off (defaults to on)

deletedownloadedtempfiles = on

# Initial Trickle delay

# This is the number of seconds a browser connection is left waiting

# before first being sent *something* to keep it alive. The

# *something* depends on the download manager chosen.

# Do not choose a value too low or normal web pages will be affected.

# A value between 20 and 110 would be sensible

# This may be ignored by the configured download manager.

initialtrickledelay = 20

# Trickle delay

# This is the number of seconds a browser connection is left waiting

# before being sent more *something* to keep it alive. The

# *something* depends on the download manager chosen.

# This may be ignored by the configured download manager.

trickledelay = 10

# Download Managers

# These handle downloads of files to be filtered and scanned.

# They differ in the method they deal with large downloads.

# Files usually need to be downloaded 100% before they can be

# filtered and scanned before being sent on to the browser.

# Normally the browser can just wait, but with content scanning,

# for example to AV, the browser may timeout or the user may get

# confused so the download manager has to do some sort of

# 'keep alive'.

#

# There are various methods possible but not all are included.

# The author does not have the time to write them all so I have

# included a plugin systam. Also, not all methods work with all

# browsers and clients. Specifically some fancy methods don't

# work with software that downloads updates. To solve this,

# each plugin can support a regular expression for matching

# the client's user-agent string, and lists of the mime types

# and extensions it should manage.

#

# Note that these are the matching methods provided by the base plugin

# code, and individual plugins may override or add to them.

# See the individual plugin conf files for supported options.

#

# The plugins are matched in the order you specify and the last

# one is forced to match as the default, regardless of user agent

# and other matching mechanisms.

#

downloadmanager = '/etc/dansguardian/downloadmanagers/fancy.conf'

##!! Not compiled !! downloadmanager = '/etc/dansguardian/downloadmanagers/trickle.conf'

downloadmanager = '/etc/dansguardian/downloadmanagers/default.conf'

# Content Scanners (Also known as AV scanners)

# These are plugins that scan the content of all files your browser fetches

# for example to AV scan. The options are limitless. Eventually all of

# DansGuardian will be plugin based. You can have more than one content

# scanner. The plugins are run in the order you specify.

# This is one of the few places you can have multiple options of the same name.

#

# Some of the scanner(s) require 3rd party software and libraries eg clamav.

# See the individual plugin conf file for more options (if any).

#

#!! Not compiled !! contentscanner = '/etc/dansguardian/contentscanners/clamav.conf'

#!! Not compiled !! contentscanner = '/etc/dansguardian/contentscanners/clamdscan.conf'

#!! Unimplemented !! contentscanner = '/etc/dansguardian/contentscanners/kavav.conf'

#!! Not compiled !! contentscanner = '/etc/dansguardian/contentscanners/kavdscan.conf'

#!! Not compiled !! contentscanner = '/etc/dansguardian/contentscanners/icapscan.conf'

#!! Not compiled !! contentscanner = '/etc/dansguardian/contentscanners/commandlinescan.conf'

# Content scanner timeout

# Some of the content scanners support using a timeout value to stop

# processing (eg AV scanning) the file if it takes too long.

# If supported this will be used.

# The default of 60 seconds is probably reasonable.

contentscannertimeout = 60

# Content scan exceptions

# If 'on' exception sites, urls, users etc will be scanned

# This is probably not desirable behavour as exceptions are

# supposed to be trusted and will increase load.

# Correct use of grey lists are a better idea.

# (on|off) default = off

contentscanexceptions = off

# Auth plugins

# These replace the usernameidmethod* options in previous versions. They

# handle the extraction of client usernames from various sources, such as

# Proxy-Authorisation headers and ident servers, enabling requests to be

# handled according to the settings of the user's filter group.

# Multiple plugins can be specified, and will be queried in order until one

# of them either finds a username or throws an error. For example, if Squid

# is configured with both NTLM and Basic auth enabled, and both the 'proxy-basic'

# and 'proxy-ntlm' auth plugins are enabled here, then clients which do not support

# NTLM can fall back to Basic without sacrificing access rights.

#

# If you do not use multiple filter groups, you need not specify this option.

#

#authplugin = '/etc/dansguardian/authplugins/proxy-basic.conf'

#authplugin = '/etc/dansguardian/authplugins/proxy-digest.conf'

#!! Not compiled !! authplugin = '/etc/dansguardian/authplugins/proxy-ntlm.conf'

#authplugin = '/etc/dansguardian/authplugins/ident.conf'

#authplugin = '/etc/dansguardian/authplugins/ip.conf'

# Re-check replaced URLs

# As a matter of course, URLs undergo regular expression search/replace (urlregexplist)

# *after* checking the exception site/URL/regexpURL lists, but *before* checking against

# the banned site/URL lists, allowing certain requests that would be matched against the

# latter in their original state to effectively be converted into grey requests.

# With this option enabled, the exception site/URL/regexpURL lists are also re-checked

# after replacement, making it possible for URL replacement to trigger exceptions based

# on them.

# Defaults to off.

recheckreplacedurls = off

# Misc settings

# if on it adds an X-Forwarded-For: <clientip> to the HTTP request

# header. This may help solve some problem sites that need to know the

# source ip. on | off

forwardedfor = off

# if on it uses the X-Forwarded-For: <clientip> to determine the client

# IP. This is for when you have squid between the clients and DansGuardian.

# Warning - headers are easily spoofed. on | off

usexforwardedfor = off

# if on it logs some debug info regarding fork()ing and accept()ing which

# can usually be ignored. These are logged by syslog. It is safe to leave

# it on or off

logconnectionhandlingerrors = on

# Fork pool options

# If on, this causes DG to write to the log file whenever child processes are

# created or destroyed (other than by crashes). This information can help in

# understanding and tuning the following parameters, but is not generally

# useful in production.

logchildprocesshandling = off

# sets the maximum number of processes to spawn to handle the incoming

# connections. Max value usually 250 depending on OS.

# On large sites you might want to try 180.

maxchildren = 120

# sets the minimum number of processes to spawn to handle the incoming connections.

# On large sites you might want to try 32.

minchildren = 8

# sets the minimum number of processes to be kept ready to handle connections.

# On large sites you might want to try 8.

minsparechildren = 4

# sets the minimum number of processes to spawn when it runs out

# On large sites you might want to try 10.

preforkchildren = 6

# sets the maximum number of processes to have doing nothing.

# When this many are spare it will cull some of them.

# On large sites you might want to try 64.

maxsparechildren = 32

# sets the maximum age of a child process before it croaks it.

# This is the number of connections they handle before exiting.

# On large sites you might want to try 10000.

maxagechildren = 500

# Sets the maximum number client IP addresses allowed to connect at once.

# Use this to set a hard limit on the number of users allowed to concurrently

# browse the web. Set to 0 for no limit, and to disable the IP cache process.

maxips = 0

# Process options

# (Change these only if you really know what you are doing).

# These options allow you to run multiple instances of DansGuardian on a single machine.

# Remember to edit the log file path above also if that is your intention.

# IPC filename

#

# Defines IPC server directory and filename used to communicate with the log process.

ipcfilename = '/tmp/.dguardianipc'

# URL list IPC filename

#

# Defines URL list IPC server directory and filename used to communicate with the URL

# cache process.

urlipcfilename = '/tmp/.dguardianurlipc'

# IP list IPC filename

#

# Defines IP list IPC server directory and filename, for communicating with the client

# IP cache process.

ipipcfilename = '/tmp/.dguardianipipc'

# PID filename

#

# Defines process id directory and filename.

#pidfilename = '/var/run/dansguardian.pid'

# Disable daemoning

# If enabled the process will not fork into the background.

# It is not usually advantageous to do this.

# on|off (defaults to off)

nodaemon = off

# Disable logging process

# on|off (defaults to off)

nologger = off

# Enable logging of "ADs" category blocks

# on|off (defaults to off)

logadblocks = off

# Enable logging of client User-Agent

# Some browsers will cause a *lot* of extra information on each line!

# on|off (defaults to off)

loguseragent = off

# Daemon runas user and group

# This is the user that DansGuardian runs as. Normally the user/group nobody.

# Uncomment to use. Defaults to the user set at compile time.

# Temp files created during virus scanning are given owner and group read

# permissions; to use content scanners based on external processes, such as

# clamdscan, the two processes must run with either the same group or user ID.

#daemonuser = 'nobody'

#daemongroup = 'nobody'

# Soft restart

# When on this disables the forced killing off all processes in the process group.

# This is not to be confused with the -g run time option - they are not related.

# on|off (defaults to off)

softrestart = off

# Mail program

# Path (sendmail-compatible) email program, with options.

# Not used if usesmtp is disabled (filtergroup specific).

mailer = '/usr/sbin/sendmail -t'

')
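
The settings that matter for the browser test are easy to lose in a file this long: `filterport` is where DansGuardian accepts client connections, and `proxyip`/`proxyport` are where it forwards them. A rough Python sketch for pulling them out, assuming the plain `key = value` layout shown above (the function name `read_settings` is hypothetical, and the sample text simply repeats the four network lines from the posted file):

```python
def read_settings(text: str) -> dict:
    """Parse 'key = value' lines, skipping blanks and '#' comments.

    Repeated keys (e.g. downloadmanager) keep only the last value,
    which is fine for the single-valued network settings of interest.
    """
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # values may be wrapped in single quotes, e.g. language = 'ukenglish'
        settings[key.strip()] = value.strip().strip("'")
    return settings


# The network section as posted above
SAMPLE = """
filterip =
filterport = 8888
proxyip = 127.0.0.1
proxyport = 3128
"""

cfg = read_settings(SAMPLE)
print("browsers connect to port", cfg["filterport"])
print("DansGuardian forwards to", cfg["proxyip"] + ":" + cfg["proxyport"])
```

With the values as posted, the port a browser would need to point at is 8888 rather than 8080 or 80, and `proxyip = 127.0.0.1` looks consistent with Squid running on the same box.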

squid.conf:

$this->bbcode_second_pass_code('', '#

# Recommended minimum configuration:

#

# Example rule allowing access from your local networks.

# Adapt to list your (internal) IP networks from where browsing

# should be allowed

acl localnet src 10.0.0.0/8 # RFC1918 possible internal network

acl localnet src 172.16.0.0/12 # RFC1918 possible internal network

acl localnet src 192.168.0.0/200 # RFC1918 possible internal network

acl localnet src fc00::/7 # RFC 4193 local private network range

acl localnet src fe80::/10 # RFC 4291 link-local (directly plugged) machines

# This line added by Sean

#acl localhost src 127.0.0.1/32

#these lines below give errors and kills squid...so maybe they are for an older version?

#httpd_accel_host virtual

#httpd_accel_port 80

#httpd_accel_single_host off

#httpd_accel_with_proxy on

#httpd_accel_uses_host_header on

acl SSL_ports port 443

acl Safe_ports port 80 # http

acl Safe_ports port 21 # ftp

acl Safe_ports port 443 # https

acl Safe_ports port 70 # gopher

acl Safe_ports port 210 # wais

acl Safe_ports port 1025-65535 # unregistered ports

acl Safe_ports port 280 # http-mgmt

acl Safe_ports port 488 # gss-http

acl Safe_ports port 591 # filemaker

acl Safe_ports port 777 # multiling http

acl CONNECT method CONNECT

#

# Recommended minimum Access Permission configuration:

#

# Deny requests to certain unsafe ports

http_access deny !Safe_ports

# Deny CONNECT to other than secure SSL ports

http_access deny CONNECT !SSL_ports

# Only allow cachemgr access from localhost

http_access allow localhost manager

http_access deny manager

# We strongly recommend the following be uncommented to protect innocent

# web applications running on the proxy server who think the only

# one who can access services on "localhost" is a local user

#http_access deny to_localhost

#

# INSERT YOUR OWN RULE(S) HERE TO ALLOW ACCESS FROM YOUR CLIENTS

#

# Example rule allowing access from your local networks.

# Adapt localnet in the ACL section to list your (internal) IP networks

# from where browsing should be allowed

http_access allow localnet

http_access allow localhost

# And finally deny all other access to this proxy

http_access deny all

# Squid normally listens to port 3128

#http_port 3128 # this was the original line

http_port 3128 transparent

# Uncomment and adjust the following to add a disk cache directory.

#cache_dir ufs /var/cache/squid 100 16 256

#Sean added these in

#cache_effective_user proxy

#cache_effective_group proxy

# Leave coredumps in the first cache dir

coredump_dir /var/cache/squid

#

# Add any of your own refresh_pattern entries above these.

#

refresh_pattern ^ftp: 1440 20% 10080

refresh_pattern ^gopher: 1440 0% 1440

refresh_pattern -i (/cgi-bin/|\?) 0 0% 0

refresh_pattern . 0 20% 4320

#Sean added DNS Name Servers

dns_nameservers 208.67.222.123, 208.67.220.123 #OpenDNS FamilyShield DNS

')
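
One line in the squid.conf above stands out: `acl localnet src 192.168.0.0/200`. The number after the slash is a prefix length (0 to 32 for IPv4), not the last octet of an address range, so `/200` is not a valid mask. A small sketch using Python's standard `ipaddress` module shows the difference. The `/24` alternative is an assumption about what the 192.168.0.200 - 250 range was meant to express, and how Squid itself reacts to the bad value has not been verified here.

```python
import ipaddress


def check_network(cidr: str) -> str:
    """Describe a CIDR string, or report why it is invalid."""
    try:
        net = ipaddress.ip_network(cidr, strict=True)
    except ValueError as exc:
        return f"{cidr}: invalid ({exc})"
    return f"{cidr}: valid, {net.num_addresses} addresses"


print(check_network("192.168.0.0/200"))  # the value from the posted squid.conf
print(check_network("192.168.0.0/24"))   # assumed intent: the whole 192.168.0.x LAN

# Every client address in the post's range falls inside the /24.
lan = ipaddress.ip_network("192.168.0.0/24")
clients = [ipaddress.ip_address(f"192.168.0.{n}") for n in range(200, 251)]
print(all(ip in lan for ip in clients))
```

If the `/24` reading is right, the matching ACL line would be `acl localnet src 192.168.0.0/24`.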

running squid and dansguarndian on a pogoplug for a home net

Re: running squid and dansguarndian on a pogoplug for a home

About the tedium of ticking the proxy box on every machine that should use DansGuardian:

Use http://translate.google.com on this article:

http://www.monitor.si/clanek/filtriranj ... om/121378/

relevant part:

Zapiranje stranskih izhodov

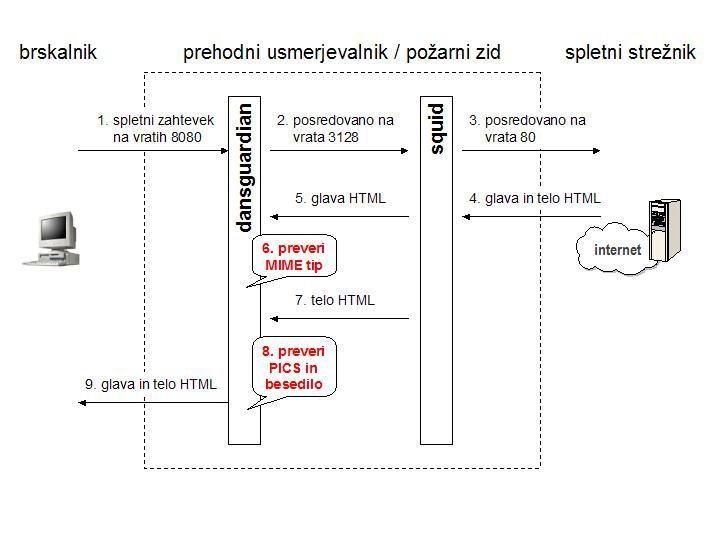

S slike 6 je razvidno potovanje spletne strani skozi TCP/IP vrata na prehodnem usmerjevalniku. Dansguardian posluša na vratih 8080, to so sicer standardna vrata za posredniški predpomnilnik. Če želimo uporabiti njegove storitve, moramo brskalnik prenastaviti s standardnih vrat 80 na vrata 8080. To bi morali narediti na vseh delovnih postajah. Če bi potem hoteli zapreti vrata 80 na prehodnem usmerjevalniku, da se uporabniki ne bi mogli izmuzniti mimo filtra skoznje v divjino spleta, bi morali spremeniti pravila še tam.

S precej manj truda in bolj zvito je mogoče isto doseči s preusmeritvijo med vrati na prehodnem usmerjevalniku. V nastavitev požarnega zidu dodamo vrstico:

# ACTION SOURCE DEST PROTO DEST PORT

# Preusmeri ves WWW promet z vrat 80 na vrata 8080

REDIRECT loc 8080 tcp www

Po tem popravku lahko brskalnik v krajevnem omrežju deluje na običajnih vratih 80, uporabniki pa nimajo nobenega stranskega izhoda mimo filtra, saj se ves spletni promet skozi preusmeritev odpelje naravnost v filter.

Translated:

Closing the side exits

Figure 6 shows how a web page travels through the TCP/IP ports on the gateway router. DansGuardian listens on port 8080, which is otherwise the standard port for a caching proxy. To use its services, we have to reconfigure the browser from the standard port 80 to port 8080. This would have to be done on every workstation. If we then wanted to close port 80 on the gateway router, so that users could not slip past the filter through it into the wilds of the web, we would have to change the rules there as well.

The same can be achieved with much less effort, and more cleverly, by redirecting between ports on the gateway router. We add this line to the firewall configuration:

# ACTION SOURCE DEST PROTO DEST PORT

# Redirect all WWW traffic from port 80 to port 8080

REDIRECT loc 8080 tcp www

After this change, browsers on the local network can keep using the usual port 80, and users have no side exit past the filter, because the redirect sends all web traffic straight into the filter.

- getty232
